I’m back. It’s time for me to post some screenshots of my initial FXAA implementation.

I also want to share the downsides I’ve run into so far.

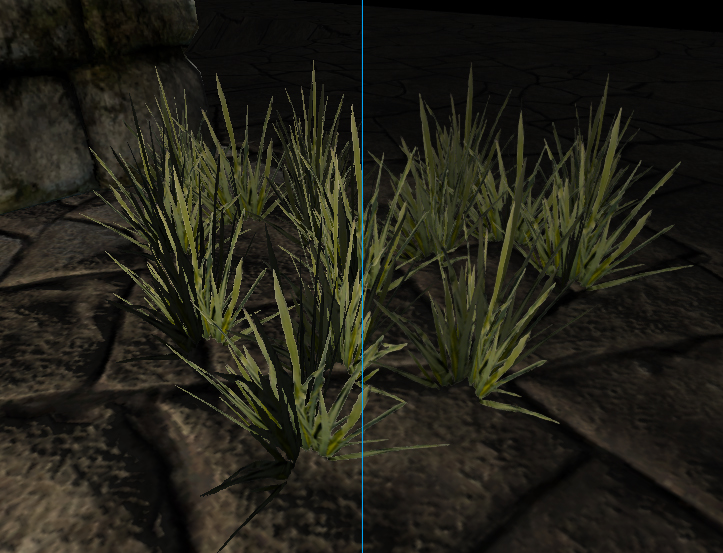

So without further ado, let’s look at the samples I prepared. I picked a much-dreaded source of aliasing… vegetation!

(Just a reminder: this uses FXAA’s default preset, ‘preset 12’ to be precise, with everything else left at its defaults, so I haven’t tweaked anything.)


(Oh, one more thing: the comparison shots aren’t framed identically, which makes them a little confusing. Sorry about that.)

This one is “zoomed in”. It’s really just the same image, cropped and resized in Photoshop with nearest-neighbor filtering so the hard pixel edges stay intact.
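Nearest-neighbor scaling keeps pixels hard because it never blends anything: every output pixel is a copy of exactly one input pixel, so the aliasing you want to compare isn’t smoothed over the way bilinear or bicubic resampling would smooth it. Here’s a minimal Python sketch for an integer zoom factor. The function name is made up for illustration, and this is not what Photoshop runs internally.

```python
def upscale_nearest(image, factor):
    """Upscale a 2D grid of pixels by an integer factor using nearest-neighbor sampling.

    Every output pixel is a copy of exactly one input pixel, so no new
    (blended) values appear and hard edges stay hard.
    """
    if factor < 1:
        raise ValueError("factor must be a positive integer")
    out = []
    for row in image:
        # Repeat each pixel horizontally...
        wide_row = [px for px in row for _ in range(factor)]
        # ...then repeat the widened row vertically (as independent copies).
        out.extend(list(wide_row) for _ in range(factor))
    return out


# A 2x2 checkerboard of black (0) and white (255) pixels, zoomed 3x.
checker = [[0, 255], [255, 0]]
for row in upscale_nearest(checker, 3):
    print(row)
```

The zoomed checkerboard still contains only 0 and 255; a filtering resize would introduce in-between grays along the edges.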

And here’s the last one, a “long shot”, taken from farther away so the mipmaps kick in.
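The mip level a texture gets sampled from depends on how many texels land on each screen pixel, which is why moving the camera back pushes the grass textures onto smaller mips. Below is a simplified Python sketch of the level-of-detail rule λ = log2(ρ), where ρ is texels per screen pixel. It is an isotropic approximation with hypothetical names; real GPUs derive ρ from per-pixel screen-space derivatives and also apply LOD bias and anisotropic filtering, all of which this ignores.

```python
import math


def mip_level(texture_size, screen_pixels, num_levels):
    """Rough, isotropic level-of-detail estimate for a square texture.

    texture_size:  width of mip level 0 in texels.
    screen_pixels: how many screen pixels that width covers.
    Returns a fractional level (trilinear filtering blends the two nearest mips).
    """
    rho = texture_size / screen_pixels             # texels per screen pixel
    lod = math.log2(rho) if rho > 1.0 else 0.0     # magnification stays on level 0
    return min(lod, num_levels - 1)                # clamp to the smallest mip


# A 512x512 texture has 10 mip levels (512, 256, ..., 1).
for pixels in (512, 128, 32):
    print(pixels, mip_level(512, pixels, 10))
```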

Now then. Let’s move on to me just typing a bunch of nonsense.

First of all, I’m not particularly into anti-aliasing to begin with, and I don’t have much experience using it in any capacity.

It’s been off my radar for a long time.

When I do spend my free time playing video games, they tend to be fairly dated ones that often have no anti-aliasing at all, so it rarely crosses my mind while I’m playing.

Anyway, I’ve now read up on FXAA more thoroughly, and I’m very much intrigued by it, partly because of its simplicity (it took me less than half an hour to get working) and partly because of other nice traits such as its portability.

Mostly, though, I’m intrigued by it because it’s a post-process: it runs as a shader pass over the final rendered image, so it doesn’t need to be rooted very deeply in the engine in order to work.
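The simplicity shows in FXAA’s very first step. Here’s a minimal Python sketch (not my shader, and only a fragment of the real algorithm) of the early-exit test from FXAA 3.11’s quality path: compute luma for a pixel and its four direct neighbors, and only treat the pixel as an edge if the luma range is large enough. It reads nothing but the final image, which is why FXAA needs so little from the engine. The function names are made up for illustration, and the two thresholds mirror the defaults suggested in FXAA 3.11’s source comments. The real shader then searches along the detected edge and blends, which is left out here.

```python
# Rec. 601 luma weights, as suggested in FXAA 3.11's comments for its luma pre-pass.
def luma(rgb):
    r, g, b = rgb
    return 0.299 * r + 0.587 * g + 0.114 * b


EDGE_THRESHOLD = 0.166       # contrast needed, relative to the brightest sample
EDGE_THRESHOLD_MIN = 0.0833  # absolute floor so very dark regions are skipped


def needs_aa(image, x, y):
    """FXAA-style early-exit test: is the local luma contrast high enough?

    image is a list of rows of (r, g, b) tuples in [0, 1];
    reads outside the image are clamped to the border.
    """
    height, width = len(image), len(image[0])

    def luma_at(px, py):
        px = min(max(px, 0), width - 1)
        py = min(max(py, 0), height - 1)
        return luma(image[py][px])

    # Center pixel plus its north, south, east and west neighbors.
    samples = [luma_at(x, y), luma_at(x, y - 1), luma_at(x, y + 1),
               luma_at(x + 1, y), luma_at(x - 1, y)]
    luma_max, luma_min = max(samples), min(samples)
    contrast = luma_max - luma_min
    # Process the pixel only if contrast beats both the relative and absolute thresholds.
    return contrast >= max(EDGE_THRESHOLD_MIN, luma_max * EDGE_THRESHOLD)


black, white = (0.0, 0.0, 0.0), (1.0, 1.0, 1.0)
dot = [[black] * 3 for _ in range(3)]
dot[1][1] = white
print(needs_aa(dot, 1, 1))   # True: a white pixel on black is high contrast
flat = [[(0.5, 0.5, 0.5)] * 3 for _ in range(3)]
print(needs_aa(flat, 1, 1))  # False: a flat gray area is left alone
```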

For example, there are FXAA shaders for engines that don’t necessarily ship it natively but can still use it because they support custom post-processing effects. Unreal Engine 3 comes to mind. (If I recall correctly, there should be an FXAA shader for it somewhere…)

I briefly mentioned “downsides” at the start of this post, and that has to do with dips in my engine’s overall performance. That’s right: my engine has finally suffered its first performance drop since its inception!

Granted, it’s not a huge drop, mind you, but it’s just enough to get me concerned.

And I know what you’re thinking: it’s not just the FXAA. I actually think my HDR bloom is the real culprit; it’s pretty heavy on samples. I’m definitely going to need to trim that down a tad. Blurring is always expensive, since every output pixel has to read many input samples.
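For a rough sense of how blur sample counts add up, here’s a small Python sketch. It assumes a Gaussian blur of a given radius (the bloom implementation itself isn’t described in this post) and counts texture reads per output pixel for a full 2D kernel versus a separable one that runs a horizontal pass followed by a vertical pass. The function names are hypothetical.

```python
import math


def gaussian_weights(radius, sigma):
    """Normalized 1D Gaussian kernel with 2 * radius + 1 taps."""
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def samples_per_pixel(radius, separable):
    """Texture reads per output pixel for a square blur of the given radius."""
    taps = 2 * radius + 1
    # A full 2D kernel reads taps * taps texels; a separable blur does one
    # horizontal and one vertical pass of `taps` reads each.
    return 2 * taps if separable else taps * taps


for radius in (4, 8, 16):
    print(f"radius {radius}: 2D = {samples_per_pixel(radius, False)}, "
          f"separable = {samples_per_pixel(radius, True)}")
```

At radius 8 that’s 289 reads per pixel for the full kernel versus 34 for the separable version. The two give the same result for a Gaussian because its 2D kernel is the outer product of the 1D weights from `gaussian_weights`.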

But all in all, I’m satisfied so far. I’m going to keep poking around with this, soooo… see you later! 🙂

Edit: OK… I forgot that I was in fact alpha testing manually in my fragment shader, which isn’t that fast (discarding fragments can keep the GPU from rejecting hidden pixels early). So the performance drop must have coincided with when I started scattering those grass patch models around the scene. In fact, looking down at one of them drops my framerate significantly. So I think my bloom and FXAA are both off the hook for that one… Oops.